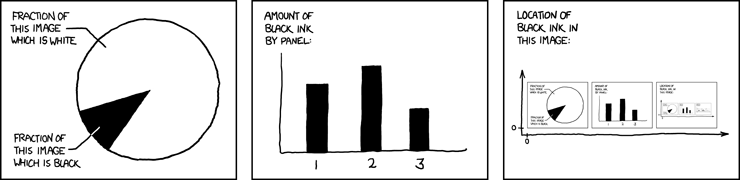

A fun fact about predicting your own behavior, particularly publicly, is that the act of predicting it changes the prediction. “I’m 75% likely to maintain my Duolingo streak all year, but now that I’ve said so I’m actually 90% likely, but now that I’ve said that, …” Or what happens when the probability starts very low but you add a wager? It’s like this self-describing xkcd:

Or like the sentence, “This sentence consists of exactly fifty-seven characters.” [1]

(This, incidentally, is part of what’s hard about predicting whether superhuman AI will accidentally destroy everything humans value in the universe. If we actually had consensus on the probability being high, we’d be galvanized to change course. It’s as if you knew you had a high chance of accidentally taking a fatal dose of medicine: you’d double-check the dosage and make the probability low again. Not that I’m arguing against sounding alarm bells.)

Anyway, a fun fact about Beeminder, from way back in the Kibotzer days, is that it started as essentially a prediction market.

You can hear Bethany tell the story in an ancient Quantified Self talk from when Beeminder was a side project. Back then, instead of you automatically paying Beeminder when you went off track, Beeminder just showed you a graph of your progress and your commitment. The commitment contracts themselves were arranged separately. The inaugural commitment contracts were Bethany and me staking $2,000 each on a gallery of personal fitness and productivity goals during the summer of 2010:

So what did “staking $2,000” mean? We were each promising to pay some friend or family member that $2k if we didn’t stay on track on all of those goals. Which friend or family member was determined by auction, coordinated manually by email at first. We started the bidding at $10, it quickly went to $50, and then we sold them for $65 each the next day.

That meant that our friends were paying $65 for the chance to win $2k. Which implies market odds of 96.8% that we’d succeed at our goals that summer. Which we did, phew. Obviously the probability would not have been nearly so high without the money at stake.
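The implied odds come from treating the $65 price as the buyers’ expected winnings on a $2k payout: $65 is 3.25% of $2,000, so the market put our chance of failing at 3.25%. A minimal Python sketch of that arithmetic, assuming risk-neutral buyers and ignoring things like the time value of money (the function name is just illustrative):

```python
def implied_success_probability(price: float, payout: float) -> float:
    """Success probability implied by paying `price` for a claim worth `payout` on failure.

    Assumes a risk-neutral buyer who prices the claim at its expected value,
    i.e. price = P(failure) * payout.
    """
    if payout <= 0:
        raise ValueError("payout must be positive")
    p_failure = price / payout
    return 1.0 - p_failure


p = implied_success_probability(65, 2000)
print(f"{p:.2%}")  # 96.75%, which rounds to the 96.8% quoted above
```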

Emboldened by that trial, we set up a slightly more formal auction interface for other people to use:

That one turns the game theory up to eleven. The commitment contract there was my sister Melanie’s weight loss goal, with $600 of her money at stake. In the first row of that table, the bidders specify the most they’d pay to be the beneficiary of the commitment contract. The auction mechanism then does a bunch of math [2] to decide how much people actually pay (second row) and to divvy up the potential booty (third row). As it turned out, Laurie, our mother, got half the contract: half of Melanie’s $600, had she failed at her goal. David Yang got 25%, and Dan Goldstein got the remaining 25%. David Reiley, Bethany, and I didn’t bid enough to get anything. And then, once again, Melanie did in fact stay on track, so those shares didn’t end up paying out. Each person’s bid implies the odds they assign to Melanie actually staying on track on her weight goal. The bottom right number, 2.5%, is the aggregated market odds.

Today, Beeminder only offers infinitely bad odds. You pay for going off track, but don’t get paid for staying on track. You could think of that as perfectly fair odds if using Beeminder meant a 100% chance of always staying on track. It doesn’t, but these days we view this all very differently, largely because staying on vs going off track is not actually a binary outcome. You’ll inevitably go off track sometimes, and that’s fine: you’re really just paying for the motivation Beeminder provides the rest of the time. We have a whole series of blog posts about this: Derailing Is Not Failing, Paying Is Not Punishment, and Derailing It Is Nailing It.

Probably, if you’re reading to the end of Beeminder blog posts, you’re already on board with all that. [3] But if you’re a prediction market person, we’d actually love to convince you to try predicting your own behavior via Manifold markets. (Disclosure: I’m an investor in Manifold.) Here are some examples of people (including us) doing so:

Last but not least, I want to launch a new feature letting Manifold users convert mana (that’s Manifold’s play-money currency) to honey money (Beeminder’s currency, aka store credit on Beeminder) and vice versa, in time to talk about it and use it at Manifest!

[1] If you’re wondering how I made that sentence come out right, I just let Mathematica brute-force it:

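```mathematica
(* Try every candidate length and print any sentence whose length equals the number it names *)
For[i = 2, i < 1000, i++,
  s = "This sentence consists of exactly " <> IntegerName[i] <> " characters.";
  If[StringLength[s] == i, Print[i, ": ", s]]]
```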

I then thought to let GPT-4’s Code Interpreter have a shot at it. It got there with minimal coaxing, which, as of 2023, feels utterly gobsmacking.
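For anyone without Mathematica, here’s a rough Python equivalent of the same brute-force search. Python has no built-in counterpart to `IntegerName`, so `integer_name` below is a hypothetical stand-in that only covers 1 to 999 and assumes hyphenated tens with no “and” (“one hundred one”); a different spelling convention could turn up different hits.

```python
ONES = ["", "one", "two", "three", "four", "five", "six", "seven", "eight", "nine",
        "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen", "sixteen",
        "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy", "eighty", "ninety"]


def integer_name(n: int) -> str:
    """Hypothetical stand-in for Mathematica's IntegerName, for 1 <= n <= 999.

    Hyphenates tens ("fifty-seven") and omits "and" ("one hundred one").
    """
    if not 1 <= n <= 999:
        raise ValueError("n must be in 1..999")
    parts = []
    hundreds, rest = divmod(n, 100)
    if hundreds:
        parts.append(ONES[hundreds] + " hundred")
    if rest >= 20:
        tens, ones = divmod(rest, 10)
        parts.append(TENS[tens] + ("-" + ONES[ones] if ones else ""))
    elif rest:
        parts.append(ONES[rest])
    return " ".join(parts)


def self_describing_sentences(limit: int = 1000) -> list:
    """Return (length, sentence) pairs where the sentence states its own length."""
    hits = []
    for i in range(2, limit):
        s = "This sentence consists of exactly " + integer_name(i) + " characters."
        if len(s) == i:
            hits.append((i, s))
    return hits


for i, s in self_describing_sentences():
    print(f"{i}: {s}")
```

The fixed parts of the sentence add up to 46 characters, so a hit needs a number name exactly 46 characters shorter than the number itself, which is why the search turns up so few candidates.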

[2] If you really want to know, we have an ancient document about it. If I had to justify it, it started with the idea of letting everyone buy in with any amount of money they chose. Call that the pot. Then you divvy up shares of the contract (the money you win if the person auctioning off the commitment contract doesn’t do what they committed to) by just giving each person a fraction of the contract equal to the fraction they contributed to the pot. The more you put in, the more of the payout you get (if the contract pays out). It’s like a parimutuel market.
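The pot-splitting rule is simple enough to write down directly. A sketch, assuming the contract pays out in full and each bidder’s fraction is exactly their contribution divided by the pot (the bidder names are hypothetical):

```python
def parimutuel_shares(contributions: dict) -> dict:
    """Fraction of the contract each bidder gets: their contribution over the whole pot."""
    pot = sum(contributions.values())
    if pot <= 0:
        raise ValueError("the pot must be positive")
    return {name: amount / pot for name, amount in contributions.items()}


def payouts(contributions: dict, contract: float) -> dict:
    """What each bidder receives if the commitment contract pays out."""
    return {name: share * contract for name, share in parimutuel_shares(contributions).items()}


# Two hypothetical bidders at $100 each on a $1000 contract get $500 apiece.
print(payouts({"A": 100, "B": 100}, 1000))
# A third $100 bidder dilutes everyone to about $333.
print(payouts({"A": 100, "B": 100, "C": 100}, 1000))
```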

But then! This is where the wild game theory comes in. Deciding how much to pay into the pot is a tricky strategic problem that depends pretty delicately on what other people are paying in.

Say two other bidders have put in $100 each on a $1000 contract. And let’s say the person creating this commitment contract is doomed, as in, you’re 100% certain they’ll be paying that $1000. So those other bidders are currently getting $500 each by spending $100. If you put in $100 as well, then the three of you will get $333 each. That’s still profitable and you want to put more into the pot, but once everyone is in at about $222, each extra dollar you add gets you back less than a dollar. Each person putting $222.22 into the pot turns out to be the Nash equilibrium of this game.
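Where does $222.22 come from? If you put in x and everyone else’s contributions total O, your profit on a sure-thing contract worth V is V·x/(x + O) - x. Setting its derivative to zero gives the best response x = sqrt(V·O) - O, and with n identical bidders the symmetric solution is x = V(n - 1)/n², which for n = 3 and V = $1000 is $2000/9. A quick Python check, assuming risk-neutral bidders who are all certain the contract pays out:

```python
import math


def profit(x: float, others_total: float, contract: float) -> float:
    """Your net winnings for putting x into the pot, if the contract surely pays out."""
    return contract * x / (x + others_total) - x


def best_response(others_total: float, contract: float) -> float:
    """Contribution maximizing profit() given everyone else's total.

    d/dx [V*x/(x+O) - x] = V*O/(x+O)**2 - 1, which is zero at x = sqrt(V*O) - O.
    """
    if others_total <= 0:
        raise ValueError("others_total must be positive")
    return max(0.0, math.sqrt(contract * others_total) - others_total)


def symmetric_equilibrium(n: int, contract: float) -> float:
    """Each bidder's contribution when all n bidders best-respond to each other."""
    return contract * (n - 1) / n**2


x_star = symmetric_equilibrium(3, 1000)
print(round(x_star, 2))  # 222.22
# At equilibrium nobody wants to deviate: the best response to two others at x_star is x_star.
print(round(best_response(2 * x_star, 1000), 2))  # 222.22
# With the other two still at $100 each, your best response is higher.
print(round(best_response(200, 1000), 2))  # 247.21
```

Note that the $222 figure is where everyone’s contributions settle, not the best reply to the others sitting at $100; against those, it pays to go up to about $247, which in turn pushes them to adjust.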

So the idea of this mechanism is to compute those equilibrium bids for everyone. You just say how much you think the whole contract is worth, and the mechanism plays the Nash equilibrium for you, under the maybe-false assumption that everyone has said their true value for the whole contract. Bidders don’t have to understand any of that. They can just keep bumping up their bids as long as the mechanism is giving them a price and a fraction of the contract that feels fair.
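The post doesn’t spell out the mechanism’s exact formulas (those live in the ancient document), but here is one way such a mechanism could compute equilibrium contributions when people state different values. This sketch assumes each stated value is a risk-neutral bidder’s true value for the whole contract and that shares are proportional to contributions, as in the parimutuel setup above; it computes the standard Nash equilibrium of that proportional-share game, not necessarily Beeminder’s actual math. With X the equilibrium pot, each active bidder with value v puts in X(1 - X/v), and anyone whose value is at or below X sits out. The function and bidder names are hypothetical.

```python
def equilibrium_contributions(values: dict) -> dict:
    """Nash equilibrium pot contributions when each share is contribution / pot.

    Assumes each value is what that (risk-neutral) bidder thinks the whole contract
    is worth. Each active bidder's first-order condition v * (X - x) / X**2 = 1
    gives x = X * (1 - X / v); summing over k active bidders gives
    X = (k - 1) / sum(1 / v).
    """
    # Highest values first: only the top bidders can be active in equilibrium.
    ranked = sorted(((v, name) for name, v in values.items() if v > 0), reverse=True)
    if len(ranked) < 2:
        raise ValueError("need at least two bidders with positive values")

    pot, active, inv_sum = 0.0, 0, 0.0
    for k, (v, _) in enumerate(ranked, start=1):
        inv_sum += 1.0 / v
        if k == 1:
            continue
        candidate_pot = (k - 1) / inv_sum
        if v <= candidate_pot:
            break  # this bidder, and everyone valuing the contract less, bids nothing
        pot, active = candidate_pot, k

    contributions = {name: 0.0 for name in values}
    for v, name in ranked[:active]:
        contributions[name] = pot * (1 - pot / v)
    return contributions


# Three identical bidders valuing a sure-thing $1000 contract at $1000 each.
print(equilibrium_contributions({"A": 1000, "B": 1000, "C": 1000}))
# Hypothetical mixed values: Cy values the contract too little to be worth bidding.
bids = equilibrium_contributions({"Ann": 300, "Bo": 200, "Cy": 50})
total = sum(bids.values())
print(bids, {name: x / total for name, x in bids.items()})
```

Scanning bidders from the highest value down works because dropping out is contagious: once one bidder’s value falls to or below the would-be pot, everyone ranked below them drops out too, so the loop can stop at the first such bidder.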

It’s all preposterous amounts of overkill/overcomplication, but it did seem to work.

[3] To recap the argument, if you view Beeminder’s stings as punishment, that can (a) encourage an excuse-making mindset over a results-oriented mindset, and maybe more importantly, (b) encourage you to make your goals less ambitious. If you accept that some derailments are inevitable — part of the cost of the service, just nudges or rumble strips keeping you on track — you can dial in a sweet spot where you’re being pushed to do as much as possible (or whatever maximizes the motivational value you get from Beeminder) at minimal cost. See also Yassine Meskhout’s notion of taxing vs punitive commitment contracts.